Chroma subsampling

One of the many things I am passionate about is digital video, and one of the topics that many people don't seem to understand is the famous chroma subsampling: a process that reduces the amount of color information in the images that make up a video, in order to make it easier to transmit and reproduce.

There are several explanations of how chroma subsampling works, but I feel that none really illustrates its inner workings. Most focus on the notation and the relationship between pixels rather than on the complete picture of what actually happens, and few include the history of this technology and the reasons behind it. So, inspired by the article by Colin Bendell of Akamai's CTO office and John P. Hess's excellent video for Filmmaker IQ, I share a bit about chroma subsampling here.

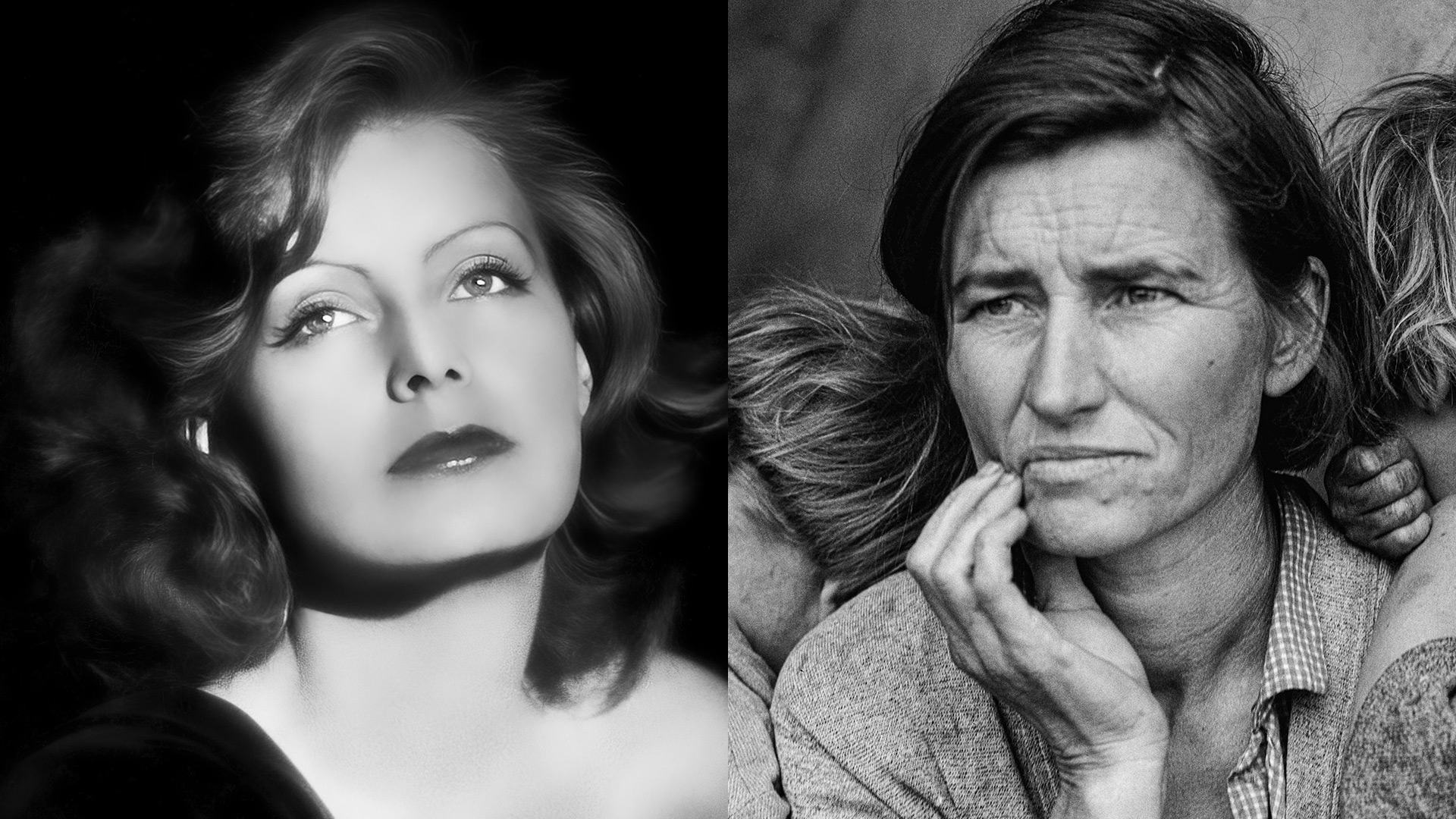

Photography, cinema and TV began their existence in black and white, a trait they even share with the first PCs, and for much the same reason: it is easier to reproduce an image based only on the amount of light present at the moment it was captured.

Color in film

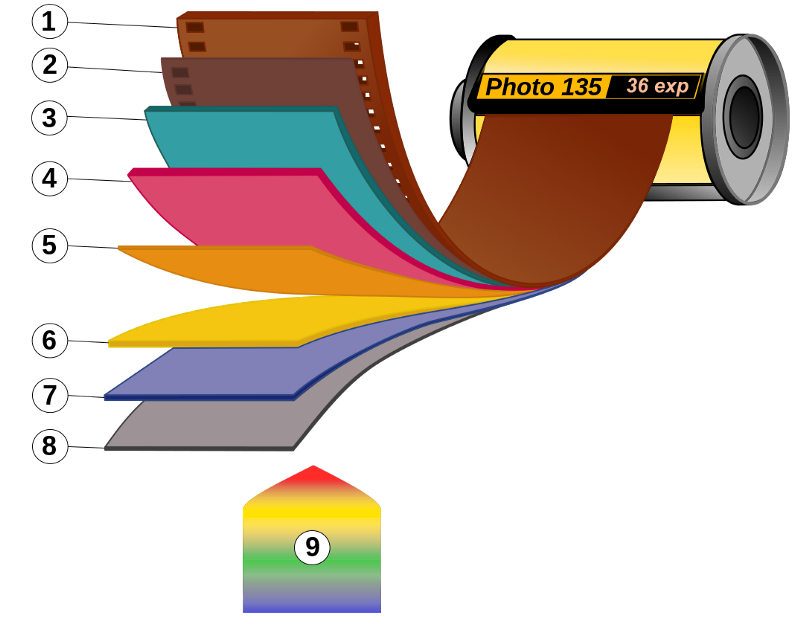

On its own, ordinary photographic film only registers the intensity of light, not its color. However, if you put a filter in front of it that only lets a certain color of light pass, the film will react only to the intensity of that color. This is why color film is really made up of three black and white layers separated by color filters, each blocking certain color frequencies. After a picture is taken, the film is chemically processed to fix the image, clear these filters, and make each layer react by turning into a colored dye.

There is something truly magical behind this science of color. Human sight perceives light and color through the famous rods and cones: the former are more sensitive to the amount of light, and the latter to its color, specifically red, green and blue light. To produce a film that captures these three primary colors, each layer must receive only one of them, and after processing the layers carry cyan, magenta and yellow dyes, the same colors used in printing (which adds black as a fourth ink):

Color on TV

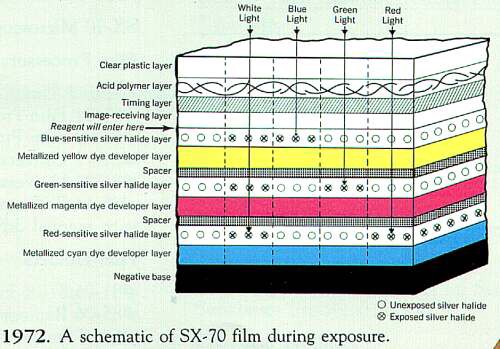

TV was born in black and white, or more precisely, in grayscale. The TV signal, which combined video and audio, was transmitted by stations and received by the TV set, which amplified and interpreted it, playing the sound through its speakers and drawing the picture line by line on the screen, what was known as a cathode ray tube (CRT). This can be appreciated in the Slow Mo Guys' slow-motion video:

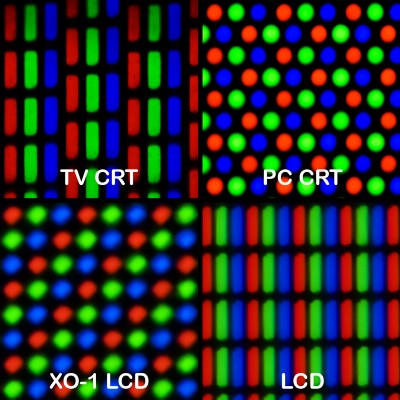

Some manufacturers had the idea of combining three cathode ray tubes in one (three electron guns sharing a single screen), using a grid of red, green and blue phosphors on the screen to show color images. There was a problem, however: a signal transmitted in RGB could not be compatible with the black and white signal, and so a new color format known as YUV was born.

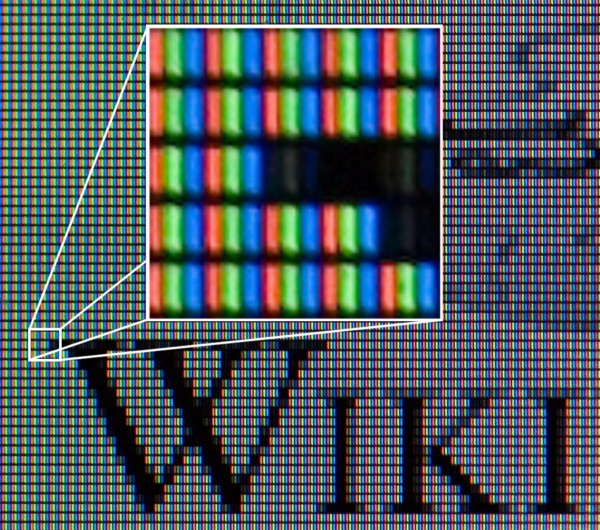

When we look at a color image on a screen, what we are really seeing are three combined images: one red, one green and one blue. These images are interwoven as red, green and blue (RGB) subpixels, and the pattern of this grid varies with the technology each manufacturer uses:

From RGB to YUV and back to RGB

The solution for transmitting a signal compatible with both black and white and color TVs was another color format, the famous YUV. It converts an image made of three channels, red, green and blue (RGB), into one made of luminance (Y: the light, a black and white image compatible with old TVs) and two new chrominance channels: a blue-difference signal (U, proportional to B − Y) and a red-difference signal (V, proportional to R − Y). This made the color signal compatible with the traditional black and white one. Old TVs never noticed the difference, while color TVs decoded the two new signals embedded in the original, extracted both chromas, and then mathematically converted the luminance and the two chromas back to RGB for display on the screen… Does it sound complicated? Here are two images to illustrate the idea:
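The conversion itself is simple arithmetic. Below is a minimal Python sketch, assuming the analog YUV definition used for PAL, with BT.601 luma weights (0.299, 0.587, 0.114) and values between 0 and 1. Digital video actually uses the closely related Y′CbCr, with different scaling and offsets, and the function names here are hypothetical:

```python
# Analog YUV (PAL-style) with BT.601 luma weights; RGB values are floats in [0, 1].
KR, KG, KB = 0.299, 0.587, 0.114  # contribution of red, green and blue to luminance
U_SCALE, V_SCALE = 0.492, 0.877   # scale factors for the two color-difference signals


def rgb_to_yuv(r, g, b):
    """Split an RGB color into luminance (Y) and two chrominance signals (U, V)."""
    y = KR * r + KG * g + KB * b  # the black and white picture
    u = U_SCALE * (b - y)         # blue-difference chroma
    v = V_SCALE * (r - y)         # red-difference chroma
    return y, u, v


def yuv_to_rgb(y, u, v):
    """Rebuild RGB from luminance and the two color-difference signals."""
    b = y + u / U_SCALE
    r = y + v / V_SCALE
    g = (y - KR * r - KB * b) / KG  # green is whatever luminance is left over
    return r, g, b


y, u, v = rgb_to_yuv(1.0, 0.0, 0.0)  # pure red
print(f"Y={y:.3f} U={u:.3f} V={v:.3f}")
```

For pure red this prints `Y=0.299 U=-0.147 V=0.615`. A gray pixel (R = G = B) gives U = V = 0: all of its information lives in the luminance, which is exactly why a black and white TV could simply ignore the two chromas.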

Human sight and bandwidth

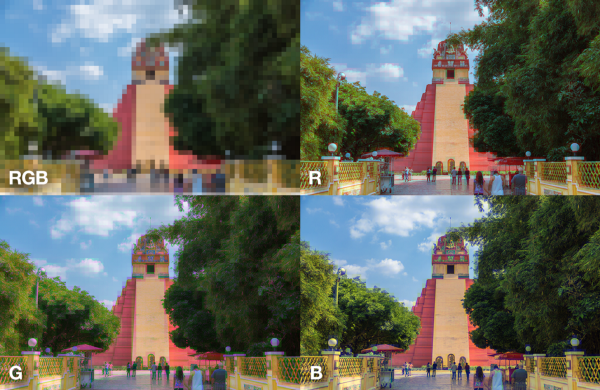

One of the factors that made transmitting and archiving color images complicated was that adopting color tripled the bandwidth and storage the information required. In looking for ways to reduce the amount of information needed to transmit and store these images, engineers took into account that human sight is far more sensitive to black and white detail (luminance) than to color. Our sensitivity to detail in blue light, for example, is particularly low, something that can be seen in the following images, in which I apply the mosaic filter first to all channels and then to each channel separately; in the last image, on the blue channel, the effect is almost imperceptible:
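The mosaic experiment can be reproduced with a few lines of code. This is a minimal sketch, not the filter of any particular image editor: it stores an image as a list of rows of (r, g, b) tuples (an assumption to keep it dependency-free) and pixelates only the chosen channel, replacing every tile of block × block pixels with that channel's average. `mosaic_channel` is a hypothetical name:

```python
def mosaic_channel(image, channel, block):
    """Pixelate one channel (0 = R, 1 = G, 2 = B) and leave the others intact.

    `image` is a list of rows, each row a list of (r, g, b) tuples.
    Every block x block tile gets that channel's average value.
    """
    height, width = len(image), len(image[0])
    out = [list(row) for row in image]  # copy the rows so the input is not modified
    for top in range(0, height, block):
        for left in range(0, width, block):
            rows = range(top, min(top + block, height))
            cols = range(left, min(left + block, width))
            values = [image[y][x][channel] for y in rows for x in cols]
            mean = sum(values) / len(values)
            for y in rows:
                for x in cols:
                    pixel = list(out[y][x])
                    pixel[channel] = mean  # only this channel is flattened
                    out[y][x] = tuple(pixel)
    return out
```

Calling it with channel 2 (blue) and a large block reproduces the last image; with channel 1 (green), which dominates luminance, the damage should be much easier to see.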

The Chroma Subsampling

Taking advantage of the limited ability of human sight to perceive color detail, chroma subsampling reduces the number of color (chroma) samples in an image while keeping the luminance at full resolution. The most popular schemes are 4:2:2 or 4:2:0 in JPG and 4:2:0 in digital video, the one used by Blu-ray, the vast majority of digital cameras, YouTube, Facebook, Twitch, Periscope, etc.
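In 4:2:0, each chroma plane keeps one sample for every 2×2 block of pixels, while luma keeps all of them. Here is a minimal sketch of that step on a single chroma plane, assuming simple averaging to shrink it and pixel repetition to stretch it back (real encoders and players use their own filters and chroma positions); both function names are hypothetical:

```python
def subsample_420(plane):
    """Keep one chroma sample per 2x2 block by averaging it (4:2:0).

    `plane` is a list of equal-length rows of numbers (one chroma channel).
    Blocks on odd edges average whatever pixels fall inside them.
    """
    height, width = len(plane), len(plane[0])
    small = []
    for top in range(0, height, 2):
        row = []
        for left in range(0, width, 2):
            block = [plane[y][x]
                     for y in range(top, min(top + 2, height))
                     for x in range(left, min(left + 2, width))]
            row.append(sum(block) / len(block))
        small.append(row)
    return small


def upsample_nearest(small, height, width):
    """Stretch the subsampled plane back to full size by repeating each sample."""
    return [[small[y // 2][x // 2] for x in range(width)] for y in range(height)]
```

A 1920×1080 frame therefore stores 1920×1080 luma samples but only 960×540 samples for each of its two chroma planes.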

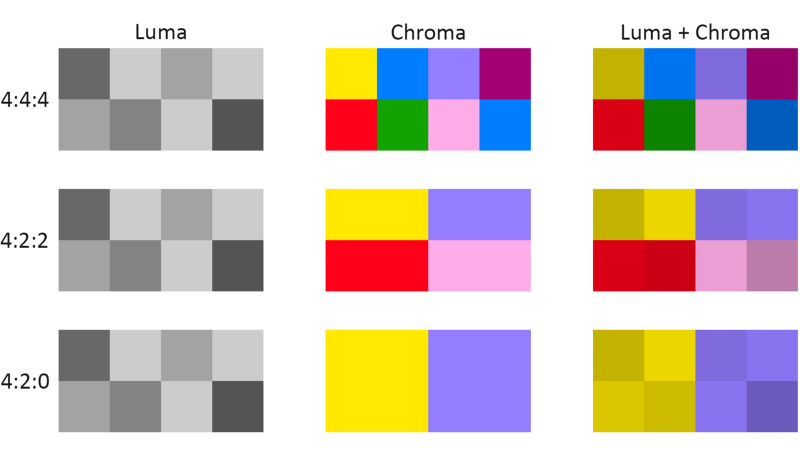

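Most video experts explain these schemes with diagrams of a small 4×2 pixel block, showing how the luma relates to both chromas. In the J:a:b notation, J is the width of the block in pixels, a is the number of chroma samples in its first row, and b is the number of chroma samples in its second row: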
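The J:a:b notation also makes it easy to count how much data each scheme saves. This sketch, with hypothetical function names, counts the samples in a J×2 reference block and the resulting average bits per pixel, assuming 8 bits per sample:

```python
def samples_per_block(j, a, b):
    """Count luma and chroma samples in a J x 2 block for J:a:b subsampling.

    j: width of the reference block in pixels (the block is 2 rows tall)
    a: chroma samples per chroma plane in the first row
    b: chroma samples per chroma plane in the second row
    """
    luma = 2 * j
    chroma = 2 * (a + b)  # two chroma planes (U and V)
    return luma, chroma


def bits_per_pixel(j, a, b, bit_depth=8):
    """Average bits stored per pixel for a given scheme and bit depth."""
    luma, chroma = samples_per_block(j, a, b)
    return (luma + chroma) * bit_depth / (2 * j)


for scheme in [(4, 4, 4), (4, 2, 2), (4, 2, 0)]:
    luma, chroma = samples_per_block(*scheme)
    print("%d:%d:%d" % scheme, luma, chroma, bits_per_pixel(*scheme))
```

This prints `4:4:4 8 16 24.0`, `4:2:2 8 8 16.0` and `4:2:0 8 4 12.0`: at 8 bits, 4:4:4 needs 24 bits per pixel (the same as 8-bit RGB), 4:2:2 needs 16, and 4:2:0 only 12, half the data of 4:4:4.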

However, it is easier to see it in macro than in micro:

Digital manipulation

Chroma subsampling is fundamental to making digital video easier to transmit and store. One of its drawbacks, however, is the loss of quality when the footage is digitally manipulated. This is why video cameras that record 4:2:0 are generally considered consumer devices, those that record 4:2:2 are considered semi-professional, and those that record 4:4:4 are considered professional, not to mention 12- or 14-bit RAW formats.

One of the challenges of digital video is the famous chroma key, in which a green or blue background is made “transparent” or “invisible”. Interestingly, blue is mostly used in film because film stock was quite sensitive to blue light, while green is used in digital because of the Bayer pattern on CMOS sensors, which has two green photosites for every red one and every blue one. Red is not used for chroma keying because human skin has red tones, and because the human eye perceives that color so strongly:
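A chroma key is, at heart, a decision made for each pixel based on its color. The following is a deliberately simple green-screen sketch, a basic color-difference key rather than how any particular editing program works: a pixel is removed when its green value exceeds both red and blue by more than a threshold, with a soft ramp for edge pixels. The function name and the `threshold` and `softness` values are arbitrary assumptions for 8-bit RGB:

```python
def green_key_alpha(pixel, threshold=40, softness=60):
    """Return opacity (0.0 = keyed out, 1.0 = kept) for an 8-bit RGB pixel.

    A pixel counts as background when green exceeds both red and blue
    by more than `threshold`; `softness` widens the transition at edges.
    """
    r, g, b = pixel
    dominance = g - max(r, b)  # how much "greener" than the other channels
    if dominance <= threshold:
        return 1.0  # not green enough: keep the pixel
    if dominance >= threshold + softness:
        return 0.0  # clearly the green screen: remove it
    return 1.0 - (dominance - threshold) / softness  # partially transparent edge
```

Because the decision depends entirely on color, a 4:2:0 source, whose color only changes every two pixels, tends to produce blockier, less precise edges around hair and fine detail than a 4:4:4 source.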

Interesting facts about YUV

When director Michael Bay decided to make the Transformers movie, he took into consideration the loss of the red color in digital formats and decided to change the tone of Optimus Prime from a bright red to a more blue and purple color.

Many people believe that using a TV as a PC monitor will give the same results, without realizing that a monitor displays RGB at 8 bits per channel, equivalent to 4:4:4 chroma subsampling, while an average TV processes the image in YUV 4:2:2.

The Rec.709 color space is the standard for HD TV signal transmission, and Rec.2020, the wide-gamut space associated with UHD and HDR, is currently being adopted. However, although Rec.2020 allows 4:4:4, in practice broadcast and streaming delivery still use 4:2:2 and 4:2:0, so the added detail goes mostly into the luma while the chromas stay subsampled. If the purpose was to improve image quality, they would have invested more in color.

Adobe Premiere, Final Cut, iMovie and most video editing software (DaVinci Resolve excepted) offer no way to preview the color loss of a YUV 4:2:0 space, something that would be extremely useful while editing, just as Photoshop lets you preview an image in CMYK mode.
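A rough approximation of such a preview is not hard to sketch. The code below uses hypothetical names and simplifying assumptions (analog YUV with BT.601 weights, plain 2×2 averaging, no real codec involved): it converts each pixel to YUV, lets every 2×2 block share its average U and V, keeps the luma of each pixel, and converts back to RGB:

```python
# Rough "4:2:0 preview": how an RGB image looks once its color is stored
# at half resolution in both directions. Values are floats in [0, 1].
KR, KG, KB = 0.299, 0.587, 0.114  # BT.601 luma weights (assumption)
U_SCALE, V_SCALE = 0.492, 0.877   # analog YUV scale factors (assumption)


def to_yuv(r, g, b):
    y = KR * r + KG * g + KB * b
    return y, U_SCALE * (b - y), V_SCALE * (r - y)


def to_rgb(y, u, v):
    b = y + u / U_SCALE
    r = y + v / V_SCALE
    g = (y - KR * r - KB * b) / KG
    return r, g, b


def preview_420(image):
    """Simulate 4:2:0 on a list of rows of (r, g, b) tuples; output is not clipped."""
    height, width = len(image), len(image[0])
    yuv = [[to_yuv(*px) for px in row] for row in image]
    out = []
    for y in range(height):
        row = []
        for x in range(width):
            # Average U and V over the 2x2 block this pixel belongs to.
            top, left = y - y % 2, x - x % 2
            block = [yuv[j][i]
                     for j in range(top, min(top + 2, height))
                     for i in range(left, min(left + 2, width))]
            u = sum(p[1] for p in block) / len(block)
            v = sum(p[2] for p in block) / len(block)
            # Luma stays at full resolution; only the color is shared.
            row.append(to_rgb(yuv[y][x][0], u, v))
        out.append(row)
    return out
```

Luma survives untouched, so edges stay sharp in brightness, while small saturated details, such as red text on a white background, bleed into their neighbors. The results are not clipped to the 0 to 1 range, as a real display would do.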

I originally published this content on Medium in Spanish, but because of censorship on that platform, I moved it here.